Artificial Intelligence Mistakes

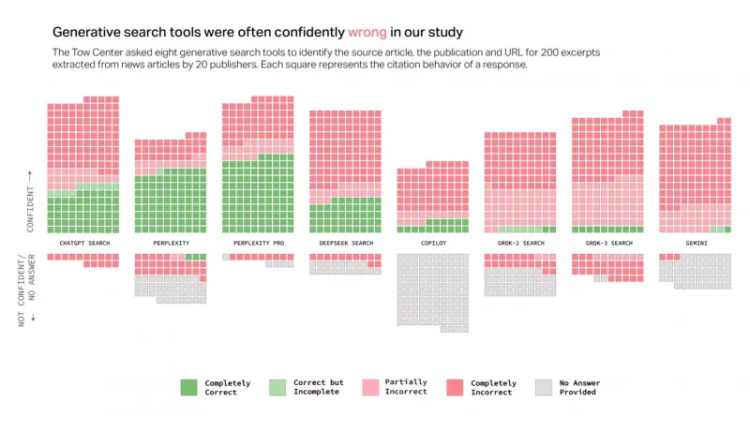

Anyone who uses AI models knows that AIs often get things wrong. But how often, and do some AIs fail more than others? The Tow Center for Digital Journalism set out to answer that question. Its researchers tested eight AI search engines (ChatGPT Search, Perplexity, Perplexity Pro, Gemini, DeepSeek Search, Grok-2 Search, Grok-3 Search, and Copilot) for accuracy and recorded how often each one declined to respond.

The researchers randomly selected 200 news articles from 20 news publishers (10 articles from each). For every article, they chose an excerpt and confirmed that searching Google for that excerpt returned the original article among the top three results. They then gave the same excerpt to each AI search tool and judged the answer on whether it correctly identified A) the article, B) the news organization that published it, and C) its URL.

The researchers then graded each answer on a scale from "completely correct" to "completely incorrect." As the chart below shows, apart from the two versions of Perplexity, the AIs performed poorly. Collectively, the AI search engines were wrong 60% of the time. Worse, they delivered these wrong answers with unwarranted confidence, rarely signaling any uncertainty.

Interestingly, even after admitting it was wrong, ChatGPT followed up that admission with still more fabricated information. It appears the AI is designed to answer every user query at all costs, and the researchers' data supports this hypothesis: ChatGPT Search was the only AI tool that responded to all 200 article queries. Yet its answers were accurate only 28% of the time, and completely inaccurate 57% of the time.

And ChatGPT isn't even the worst of the bunch. Both versions of xAI's Grok performed poorly, with Grok-3 Search answering 94% of queries incorrectly. Microsoft's Copilot wasn't much better: it declined to answer 104 of the 200 queries. Of the remaining 96, only 16 were "completely correct," 14 were "partially correct," and 66 were "completely incorrect," an error rate of roughly 69% (66 of 96) among the queries it did answer.
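
As a quick sanity check on the Copilot figures, the arithmetic can be reproduced in a few lines of Python. The counts are the ones reported in the paragraph above; the variable names are our own illustrative choices. The sketch also shows why the denominator matters: measured against the 96 queries Copilot actually answered, the "completely incorrect" share is about 69%, while measured against all 200 queries it drops to 33%, because the 104 refusals are neither right nor wrong.

```python
# Copilot outcome counts as reported in the article (200 queries in total)
declined = 104
completely_correct = 16
partially_correct = 14
completely_incorrect = 66

# Queries Copilot actually answered, and the overall total
answered = completely_correct + partially_correct + completely_incorrect
total = answered + declined

# Share of completely incorrect answers among the queries it answered
error_rate_answered = completely_incorrect / answered
# Same count, but using every query (including refusals) as the denominator
error_rate_all = completely_incorrect / total

print(f"answered: {answered} of {total}")
print(f"completely incorrect (of answered): {error_rate_answered:.1%}")
print(f"completely incorrect (of all queries): {error_rate_all:.1%}")
```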

The companies that create these models charge between $20 and $200 per month for premium access. The paid versions tested, Perplexity Pro ($20 per month) and Grok-3 Search ($40 per month), answered more queries correctly than their free counterparts (Perplexity and Grok-2 Search), yet had significantly higher error rates, because they rarely declined a query and instead gave confidently wrong answers.
