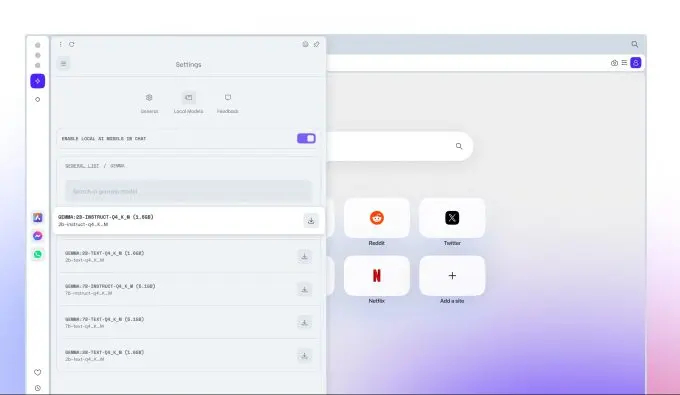

Opera allows users to download and use LLMs locally

Opera has announced that users can now download and use LLMs locally. More than 150 models are on offer, including Llama, Gemma and Vicuna, and each of them takes up more than 2 GB.

The browser company Opera has announced a new feature that lets users download and run LLMs locally on their computers. The feature offers more than 150 models from more than 50 families and is new for Opera One users.

At the moment, all of the available models come from the Ollama library. Among them are Llama from Meta, Gemma from Google, and Vicuna. Opera plans to add more sources in the future.

Note that each model takes up more than 2 GB of local disk space, so users should check the free space on their devices to avoid running out of storage.

Jan Standal, vice president of Opera, emphasized that this is the first time the browser has provided access to local LLMs from different developers. He also noted that models may become smaller as they are specialized for specific tasks.

Opera has been actively experimenting with AI-based features since 2023. Its sidebar assistant, Aria, launched in May 2023, followed by an iOS version in August. In 2024, Opera announced that it is building an AI-powered browser with its own engine for iOS.
