Researchers have invented a new type of neural network: KAN

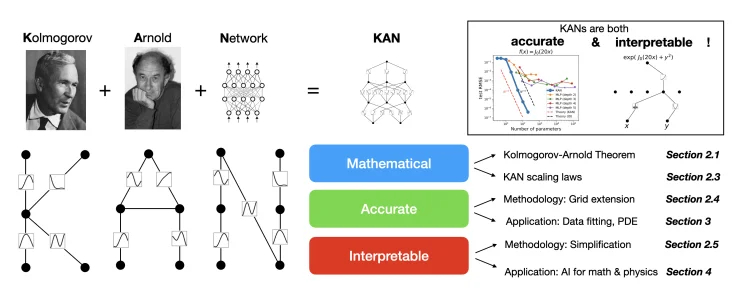

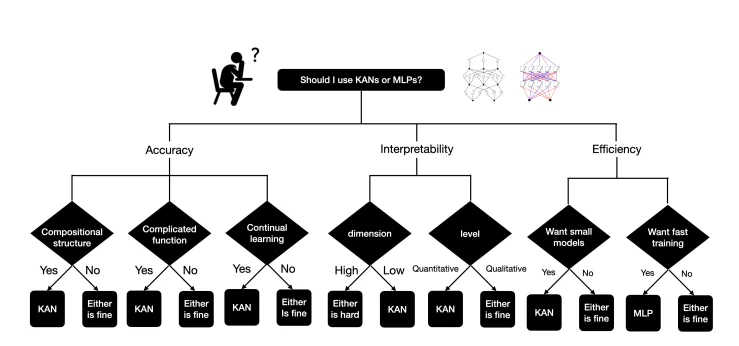

Scientists have invented a new type of neural network. Kolmogorov-Arnold networks (KANs) outperform MLPs in terms of accuracy and interpretability. Moreover, they can be visualized intuitively and can easily interact with human users.

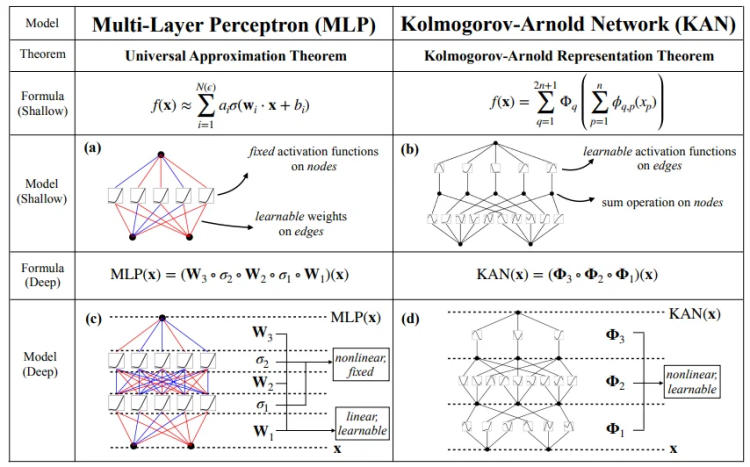

Multilayer perceptrons (MLPs), also known as fully connected feedforward neural networks, are the fundamental building blocks of modern deep learning models. Despite their widespread use, MLPs have significant drawbacks. For example, in transformers, MLPs consume almost all of the non-embedding parameters and are generally less interpretable than attention layers unless post-analysis tools are used.

As a promising alternative to MLPs, MIT researchers and their collaborators introduced Kolmogorov-Arnold Networks (KANs), named after the Kolmogorov-Arnold representation theorem that inspired them.

How do KANs work?

Like MLPs, KANs have fully connected structures. However, while MLPs place fixed activation functions on nodes (neurons), KANs place learnable activation functions on edges (weights). As a result, KANs have no linear weight matrices at all: instead, each weight parameter is replaced by a learnable 1D function parameterized as a spline.
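To make the edge-versus-node distinction concrete, here is a minimal pure-Python sketch of one KAN layer's forward pass: every edge i -> j carries its own learnable function phi_{j,i}, and output node j computes out_j = sum over i of phi_{j,i}(x_i), with no activation applied at the node. For brevity it assumes degree-1 (piecewise-linear, "hat") B-splines on a fixed uniform grid, clamps inputs outside the grid, and omits training. The original KAN work uses cubic B-splines plus a base function, so `KANLayer`, `hat_basis` and all parameters here are hypothetical illustrations, not the authors' library.

```python
import random

# Hypothetical illustration: degree-1 splines, fixed grid, no training.

def hat_basis(x, grid):
    """Degree-1 B-spline (hat function) values of x on a uniform grid.

    At most two values are nonzero and they sum to 1 inside the grid range.
    Inputs outside [grid[0], grid[-1]] are clamped to the nearest end.
    """
    h = grid[1] - grid[0]
    x = min(max(x, grid[0]), grid[-1])
    return [max(0.0, 1.0 - abs(x - g) / h) for g in grid]


class KANLayer:
    """One KAN layer: a learnable 1D function phi[j][i] on every edge i -> j."""

    def __init__(self, n_in, n_out, grid_size=8, x_min=-1.0, x_max=1.0, seed=0):
        rng = random.Random(seed)
        step = (x_max - x_min) / (grid_size - 1)
        self.grid = [x_min + m * step for m in range(grid_size)]
        # coeffs[j][i][m]: weight of basis function m in edge function phi_{j,i}.
        # These coefficients are what training would adjust.
        self.coeffs = [[[rng.gauss(0.0, 0.1) for _ in self.grid]
                        for _ in range(n_in)]
                       for _ in range(n_out)]

    def edge(self, j, i, x):
        """Evaluate phi_{j,i}(x) as a linear combination of basis functions."""
        basis = hat_basis(x, self.grid)
        return sum(c * b for c, b in zip(self.coeffs[j][i], basis))

    def forward(self, xs):
        # Each node only sums its incoming edge outputs; no nonlinearity here.
        return [sum(self.edge(j, i, x) for i, x in enumerate(xs))
                for j in range(len(self.coeffs))]


# Stack two layers into a tiny [2, 3, 1] network with random coefficients.
layer1 = KANLayer(2, 3, seed=1)
layer2 = KANLayer(3, 1, seed=2)
print(layer2.forward(layer1.forward([0.3, -0.5])))  # a list with one value

# Sanity check: coefficients equal to the grid points turn every phi into
# the identity, so a 2 -> 1 layer simply computes x0 + x1.
ident = KANLayer(2, 1)
ident.coeffs = [[list(ident.grid) for _ in range(2)]]
print(ident.forward([0.25, -0.5]))  # approximately [-0.25]
```

The identity check shows the key design choice: all of the "weight" lives in the edge coefficients, so changing them reshapes each 1D function rather than just rescaling a fixed activation.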

KAN nodes simply sum the incoming signals without applying any nonlinearity. Because every MLP weight parameter becomes a spline function in a KAN, one might expect KANs to be far more expensive. However, KANs usually allow much smaller computation graphs than MLPs, which helps offset the extra cost of each edge.
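The cost argument can be checked with simple arithmetic. The sketch below assumes each edge spline is stored as roughly G + k coefficients (G grid intervals, spline order k), which matches the usual B-spline basis count but ignores per-edge extras a real implementation may add. The helper names and the layer widths in the usage lines are hypothetical and say nothing about which network would be more accurate.

```python
def mlp_params(widths):
    """Weights plus biases of a fully connected MLP with the given layer widths."""
    return sum(a * b + b for a, b in zip(widths, widths[1:]))


def kan_params(widths, grid_size, spline_order):
    """Rough KAN count: every edge is a spline with G + k coefficients.

    Assumption: ignores any extra per-edge weights or scale factors.
    """
    per_edge = grid_size + spline_order
    return sum(a * b * per_edge for a, b in zip(widths, widths[1:]))


# Same widths: every MLP weight becomes a whole spline, so the KAN is larger.
print(mlp_params([2, 100, 100, 1]))        # 10501
print(kan_params([2, 100, 100, 1], 5, 3))  # (200 + 10000 + 100) * 8 = 82400
# A much narrower KAN (hypothetical sizes) has far fewer parameters.
print(kan_params([2, 5, 1], 5, 3))         # 120
```

At equal width a KAN has several times more parameters than an MLP, so any savings come from KANs getting by with much smaller graphs, not from cheaper edges.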

Advantages of KAN

Much smaller KANs can achieve comparable or even higher accuracy than much larger MLPs in data fitting and in solving partial differential equations (PDEs). Moreover, both theoretically and empirically, KANs exhibit faster neural scaling laws than MLPs.
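Neural scaling laws describe how test loss falls as the parameter count N grows, usually as a power law with exponent α, so a larger α means faster improvement. As an idealized worked example, assuming a pure power law: doubling N multiplies the loss by 2 to the power of minus α. The KAN paper's approximation argument predicts α = k + 1 for splines of order k, which gives α = 4 for cubic splines under its smoothness assumptions; treat the resulting factor as a theoretical illustration, not a measured result.

```latex
\ell \propto N^{-\alpha}
\;\Longrightarrow\;
\frac{\ell(2N)}{\ell(N)} = 2^{-\alpha},
\qquad
\alpha_{\text{KAN}} = k + 1 = 4 \quad (k = 3)
\;\Longrightarrow\;
\frac{\ell(2N)}{\ell(N)} = \frac{1}{16}.
```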

In terms of interpretability, KANs can be visualized intuitively and can easily interact with human users. Using examples from mathematics and physics, the researchers showed that KANs can be useful tools that help scientists (re)discover mathematical and physical laws.

Disadvantages of KAN

Currently, the biggest disadvantage of KANs is slow training: they are usually about 10 times slower than MLPs with the same number of parameters. However, the authors note that they have not yet tried to optimize KAN efficiency, so they regard slow training as an engineering problem to be solved in the future.

The scientists highlight that KANs represent a promising replacement for MLPs, opening up new horizons for improving existing deep learning models that rely heavily on MLPs.
