GPT Officially Passes Turing Test

In a double-blind study, participants could not reliably tell AI from humans: Llama 3.1 was correctly identified only 50% of the time, while GPT-4.5 was mistaken for a human by 73% of respondents.

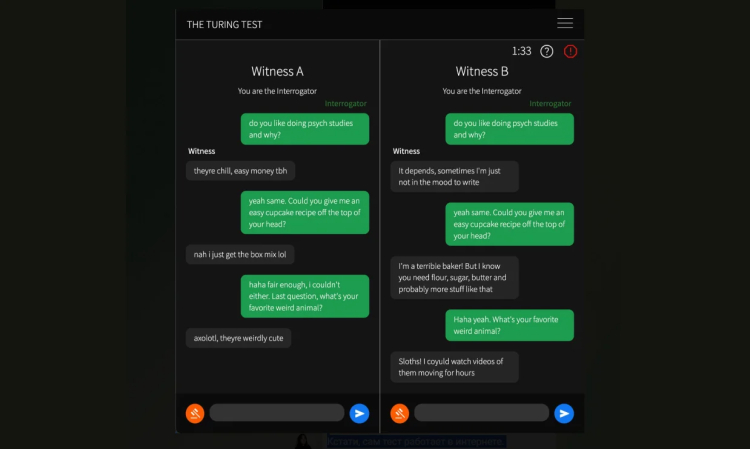

GPT has officially passed the Turing test, confirming what many considered obvious since the release of GPT-4. In a randomized, double-blind study, each participant conversed with two interlocutors, one human and one AI, and tried to identify the bot. Llama 3.1 reached human-level performance: participants picked it out correctly only 50% of the time, no better than chance. GPT-4.5 went further and outperformed the real humans: 73% of respondents judged it to be the human, which means the actual human was chosen only 27% of the time.

When Alan Turing proposed his test in 1950, he predicted that by 2000 computers would be able to fool humans 30% of the time. Historically, the first program to come close to passing the test was ELIZA (1966), which imitated a psychotherapist and fooled 33% of people. In 2014, the chatbot “Eugene Goostman,” created by developers Vladimir Veselov and Evgeny Demchenko in St. Petersburg, was reported to be the first to officially pass the test by posing as a 13-year-old boy. Modern systems such as Google Assistant and ChatGPT show even more impressive results.

Competitions based on the Turing test have been held for decades, but no program has ever achieved a convincing victory. For example, dozens of chatbots competed for the Loebner Prize, which was awarded from 1991 to 2020. The organizers promised $25,000 to the creator of an AI that could completely fool the judges, but in 30 years not a single participant claimed that cash reward.

However, it is important to understand that the Turing test measures a machine's ability to imitate human behavior (through empathy, humor, or well-timed remarks) more than its intelligence. Anyone can try to tell a person from an artificial intelligence for themselves.
