OpenAI Starts Using Google TPU Chips, Reducing Dependence on Nvidia

OpenAI has begun using Google's Tensor Processing Units to power ChatGPT and related services, reducing its reliance on Nvidia chips and Microsoft infrastructure.

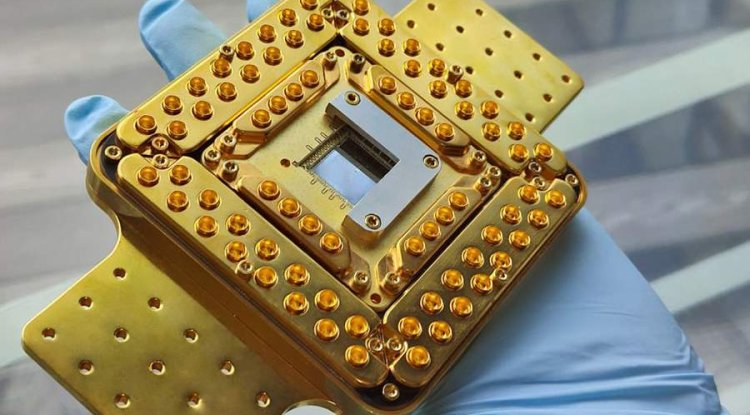

OpenAI has started using Google’s TPU AI accelerators to power ChatGPT and related services, a first step toward reducing its reliance on Nvidia chips and Microsoft infrastructure. According to The Information, the company is leasing TPUs from Google Cloud to cut the cost of inference, the process of generating answers once a model has been trained. The move could boost the TPU’s standing as a cost-effective alternative to Nvidia’s GPUs, which dominate the AI accelerator market.

OpenAI previously relied on Nvidia hardware through partnerships with Microsoft and Oracle, but has now added Google to its list of suppliers. Sources say, however, that OpenAI is not getting the most powerful TPUs: Google is reserving its most advanced chips for internal work, including the development of its own Gemini language models. Even so, access to earlier TPU versions lets OpenAI diversify its infrastructure amid growing demand for AI chips. In April, Google announced its seventh-generation TPUs, pitching them as a competitive alternative to Nvidia chips. Supply chain issues and the high cost of Nvidia GPUs are fueling demand for such alternatives.

It’s unclear whether OpenAI will use Google’s TPUs to train models or just for inference. However, in an increasingly competitive and resource-constrained environment, a hybrid infrastructure gives the company more flexibility to scale its computing.
