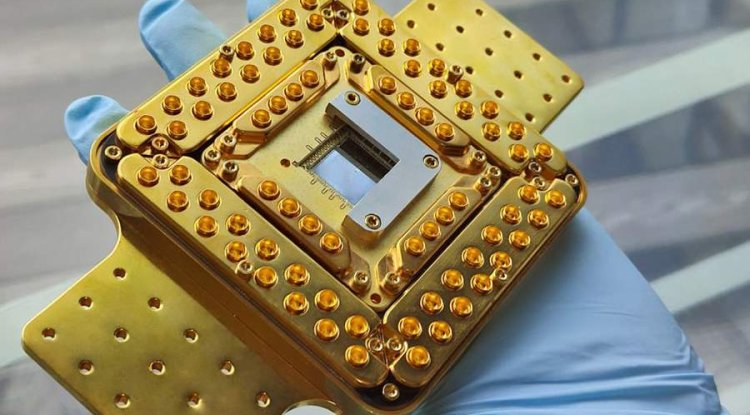

Microsoft Announces New Security System to Filter Malicious AI Output

Microsoft has introduced a security system that filters out malicious AI output. Its five new security features will be added automatically to GPT-4 and Azure AI Studio.

Microsoft has unveiled new tools for managing AI security. Azure AI Studio users are getting five new features: three are already available, and two will be added later. The three available now are prompt shields, risk and safety monitoring, and safety evaluations.

Prompt shields are designed to block attempts, whether made directly by users or embedded in external documents, to trick the model into producing malicious output.

Risk and safety monitoring is a set of tools for identifying and mitigating harmful output in real time. It also helps developers monitor the status of the model's content filters.

Safety evaluations analyze model output for consistency and safety, and create test datasets to improve manual model testing.

Azure will also soon offer generation of safety system message templates. The final update will be a validity detection feature that checks AI-generated responses for obvious errors or lapses in logic.

These security management features will be added automatically to the GPT-4 model. Microsoft is committed to security as a way to prevent the errors that commonly occur when working with AI.
