Google DeepMind Unveils Gemini Robotics — New AI Models for Robotics

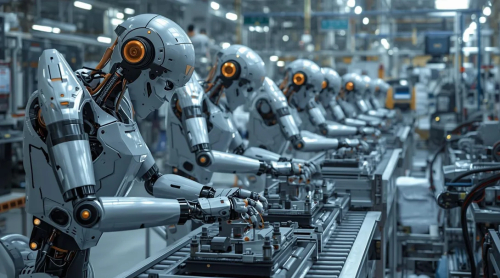

Google DeepMind has announced two new models based on Gemini 2.0, Gemini Robotics and Gemini Robotics-ER, which expand robots' ability to perform real-world tasks.

Google DeepMind has unveiled two new Gemini 2.0-based AI models for robotics. The models, Gemini Robotics and Gemini Robotics-ER, are designed to enhance robots' ability to perform real-world tasks.

Gemini Robotics is an advanced vision-language-action (VLA) model that adds physical actions to Gemini 2.0’s multimodal capabilities, allowing it to control robots directly. Gemini Robotics-ER, by contrast, offers improved spatial understanding and lets roboticists use Gemini’s embodied reasoning (ER) capabilities in their own projects.

The company is collaborating with Apptronik to create a new generation of humanoid robots, and is also working with trusted testers to further develop the technology. These models open up new possibilities for applying AI in the physical world, making robots more useful and efficient.

To be useful, AI robots must be versatile (able to adapt to different situations), interactive (quick to respond to changes), and dexterous (able to manipulate objects). Gemini Robotics takes a significant step forward in all three areas, bringing general-purpose robots closer to reality. Google emphasizes that the new models make it possible to create robots that can independently analyze and perform tasks in the physical world. This includes moving, solving logical problems, performing household tasks, interacting with people, and responding to changes in the environment.
