Google reports that attackers are embedding AI in malware code.

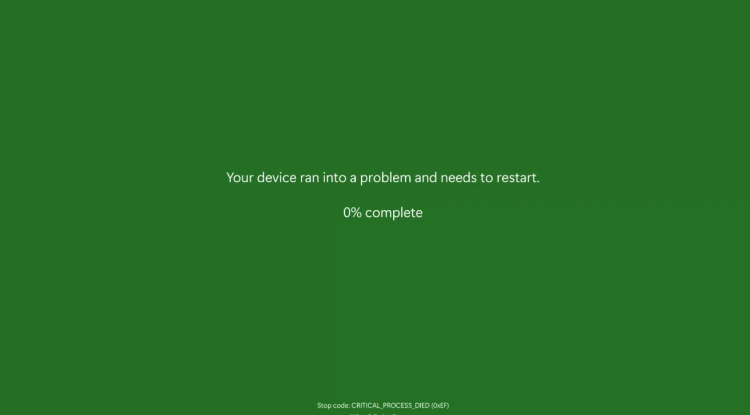

Experts at the Google Threat Intelligence Group report the emergence of a new type of cyberattack in which attackers integrate artificial intelligence into malware code. This allows the malware to adapt to defense systems.

The PromptFlux dropper, written in VBScript, is cited as an example. It saves modified copies of itself in the Windows startup folder, on removable media, and on network drives. Its key module, Thinking Robot, was integrated with the Gemini language model API and queried it for new ways to evade antivirus software. The program's access to the Gemini API has already been blocked, and no connection to known hacker groups has been established so far.

Other similar developments cited by the researchers include FruitShell, PromptSteal, QuietVault, and PromptLock. These tools use language models to generate commands, search for data, and encrypt it across various operating systems.

Experts emphasize that the malware listed is experimental, easily detected by traditional security tools, and not yet being used by hacker groups. However, they regard these developments as a significant step toward autonomous and adaptive malware, and they expect AI-enabled attacks to become significantly more common in the future.

Google has also documented numerous cases of well-known hacker groups using the Gemini model for their own purposes. China's APT41 used it to refine its framework and obfuscate code, while Iran's MuddyCoast used it to debug malware. North Korea's Masan group used AI to steal cryptocurrency, create deepfakes, and write phishing emails in multiple languages, while the Pukchong group used it to generate exploit code for network equipment and browsers.
