New startup mATX competes with Nvidia in AI chips

Nvidia has a new competitor. The startup mATX develops hardware optimized for large language models (LLMs), with the goal of delivering more computing power for the money. According to the company, its chips make it possible to train a model like GPT-4 and run a service like ChatGPT on a small startup's budget.

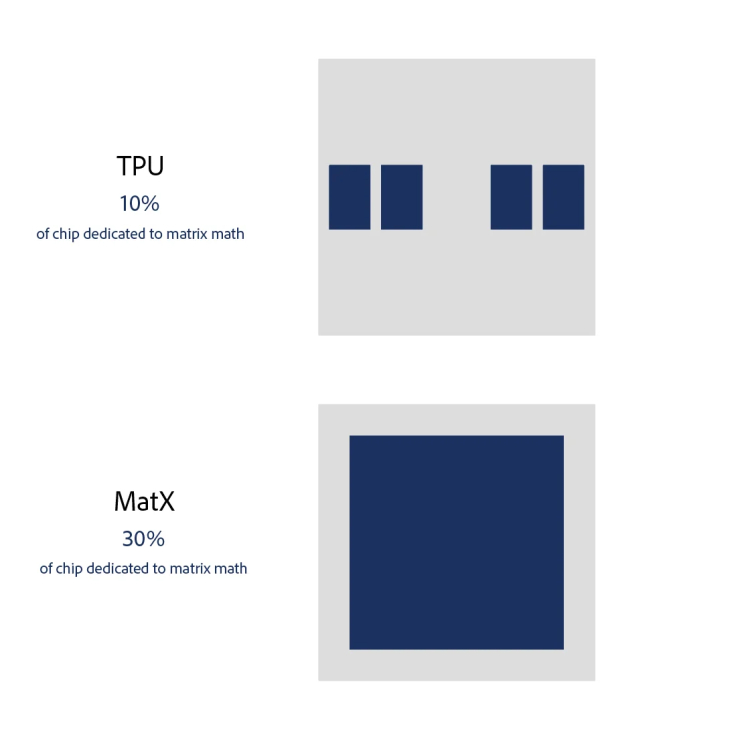

Unlike general-purpose designs that aim to perform equally well across all models, mATX devotes every transistor to maximizing performance.

mATX chips are designed to be cost-efficient for high-volume workloads: processing large amounts of training data and serving production inference. They reach peak performance on large transformer-based models with 20B+ parameters and on inference that serves thousands of concurrent users.
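To put these target workloads in perspective, here is a rough back-of-envelope sketch in Python. It is not mATX's method or design. It assumes a dense model stored in bf16 (2 bytes per parameter) and that each decoding step reads all weights once, with that single read shared by every request in the batch; KV-cache and activation traffic are ignored. The function names are hypothetical.

```python
"""Back-of-envelope sizing for large-model inference.

Simplifying assumptions (illustrative only, not from mATX):
- dense transformer, weights stored in bf16 (2 bytes per parameter)
- each decoding step reads all weights once, shared by every request in the batch
- KV-cache and activation memory traffic are ignored
"""

BYTES_PER_PARAM_BF16 = 2


def weight_bytes(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_BF16) -> float:
    """Memory needed just to hold the model weights (hypothetical helper)."""
    return num_params * bytes_per_param


def weight_bytes_per_user_per_step(num_params: float, concurrent_users: int,
                                   bytes_per_param: int = BYTES_PER_PARAM_BF16) -> float:
    """Weight traffic per generated token per user when one weight read serves the whole batch."""
    if concurrent_users < 1:
        raise ValueError("concurrent_users must be >= 1")
    return weight_bytes(num_params, bytes_per_param) / concurrent_users


if __name__ == "__main__":
    params = 20e9  # the 20B-parameter threshold mentioned in the article
    print(f"bf16 weights: {weight_bytes(params) / 1e9:.0f} GB")
    for users in (1, 100, 1000):
        per_user = weight_bytes_per_user_per_step(params, users)
        print(f"{users:>5} users -> {per_user / 1e6:,.0f} MB of weight reads per user per token")
```

Under these assumptions, a 20B-parameter model needs about 40 GB for its weights alone, and sharing each weight read across 1,000 concurrent users cuts the per-user weight traffic a thousandfold. This is a simple illustration of why serving many users at once can make better use of a chip.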

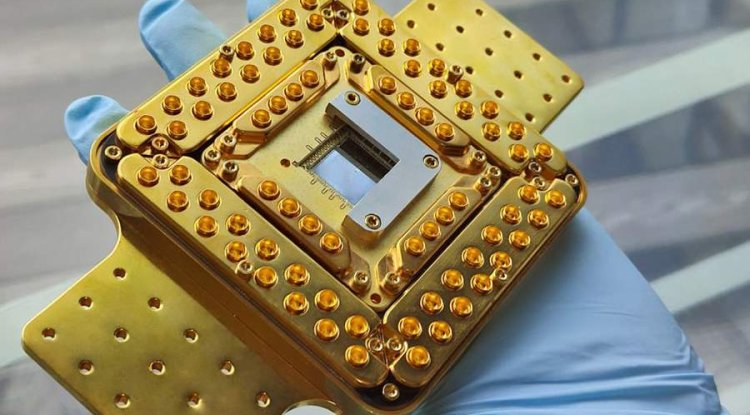

The startup's team includes specialists who previously worked on ML chips, ML compilers, and LLMs at companies such as Google and Amazon. The CEO led efficiency work on Google's PaLM, where he developed and deployed what the company describes as the world's fastest LLM inference software. The chief technology officer worked on the architecture of Google's ML chips, including its TPUs.

The company has already raised $25 million, with investors including the CEOs of Auradine and Replit. It has also received funding from leading AI and LLM researchers.
